If you haven’t read Part 1 of the guide yet, you might want to go back and read it first.

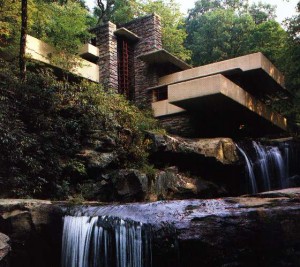

Even if you’re unfamiliar with the work of Frank Loyd Wright, his place in American architecture, or the sheer volume of what he produced, you probably recognize Fallingwater. It was commissioned as a weekend retreat for the Kaufmann family of Pittsburgh, and was completed in 1937 at a cost of about $155,000 (at least $2.6 million in today’s dollars). It was built for a time, and for clients that valued quality or quantity, and appreciated Wright’s organic style. It’s a stark contrast to the modern tract-style McMansion. Let’s contrast Fallingwater with your investment in a phone system.

Phone Systems aren’t beautiful.

They’re not enduring.

They’re not even that unique.

Of the 150 phone systems that I’ve looked in the past year, they’re more than 90% identical. In fact, the only thing more ubiquitous than their feature-sets is the near universal contempt that business owners have for buying phone systems. It’s not hard to see why… phone systems have a reputation of being big, expensive, and frustrating. It’s an epitaph earned in earlier product generations.

As I alluded to in Part I, and elaborated on in my free PDF download, before buying a phone system – you should know what you care about. Not everything mind you… you don’t necessarily need every detail thought-out beforehand (that’s part of why you’re soliciting bids, after all). But at 30,000 feet… if there’s one or two must-have feature requirements for you, be sure you know what those are. Otherwise, if you bring in the integrators who compete in this space – unless you have a very specific need, or have a situation where there’s a clear incumbent, you’re going to find yourself comparing apples and oranges in a market saturated with feature overlap.

Given no constraints, I’m a big fan of ShoreTel’s Unified Communications Platform. As much as I respect Asterisk-based systems, and the contributions that Digium has made to the ecosystem, I can’t help but like ShoreTel’s platform. To the extent that a phone system can be beautifully designed… this one is. The platform runs on a combination of embedded Linux, and VxWorks (it’s the same OS that runs the Mars Curiosity Rover). Interested in five-nine’s? It’s achievable. Geographic failover, high-volume call centers, fancy mobility features (that few people actually use) – all the boxes are checked. In other words, ShoreTel’s platform is the phone-system equivalent to a home designed by Frank Loyd Wright. Or if that analogy doesn’t strike you, than it’s probably the BMW of phone systems.

But here’s the thing… most of us don’t want, or need to live in a home built by Frank Loyd Wright, just most of us don’t need something like a BMW 7-series. More than that though, even with some innovative modern takes on the Prairie style home, and renewed general interest in organic architecture – your average executive is going to pick a turn-key tract-style McMansion in the suburbs near the office, over a Frank Loyd Wright original. Why? Because the tract style McMansion is good enough.

Enter the tract-style McMansion of phone systems.

The Allworx Unified Communications platform was conceived of in 1998 by two engineering executives from Kodak and Xerox who formed what later became known as Allworx. During the first few years of business, while the Allworx system was being developed, the company established a presence doing consulting work for big-name organizations like Kodak, HP, Xerox, and Harris. The Allworx phone system came to market at just the right time in 2002. With the platform positioned as a turn-key VoIP telephony solution, it competed feature-for-feature with many higher-cost alternatives, which were often sold via regional telephony providers. As Allworx grew, it was acquired by a publicly traded Fortune 1000 company, before eventually becoming an asset of Windstream Communications in 2011. If this were a different company, then the story would probably have ended in relative obscurity as nameless corporate asset. But that doesn’t appear to be the fate for Allworx with Windstream… since 2011, Windstream, leveraging their role a telephony provider in some markets, and taken clear steps to increase market penetration for the Allworx’s product line. As a result, the Allworx platform has experienced revenue growth of more than 250% over the past year. As of last check, the Allworx division had 80 employees, and their product continues to win market share from higher-cost alternatives sold by companies Cisco, Avaya, ShoreTel, and others.

Allworx is continuing to win business away from the high-cost Enterprise-focused competitors, by providing an innovative, low-cost, and easy to manage alternative with a rapid and continuous release cycle approach to upgrades and platform improvements.

Perhaps calling Allworx the “tract-style McMansion” of phone systems is the wrong tract, as it’s clearly a low-cost platform. So if ShoreTel is BMW, then I’d venture to call Allworx the Toyota of phone systems. Allworx is the mainstream, mid-sized economy of boring purposeful solutions. It’s not a kit-car, like the FreeBPBX, nor is it a BMW 7-series. But you’ll be hard-pressed to go as far at a comparable level of cost as the Allworx platform will take you. It’s the purpose-built and priced-right unified communications solution for small and mid-sized organizations than want reliability and ease-of-use, without paying a premium for high-end hardware, or obscure feature-sets. Put differently, a client recently contracted me to help them with phone system vendor selection, they were a medium-sized, multi-site organization, and I brought in bids from integrators representing products from Allworx, Cisco, NEC, Digium, as well as a Cloud-based solution.

Allworx had the lowest installed cost.

NEC and Digium solutions ranged 20%-25% higher.

ShoreTel? 2.5X.

Cisco… more than 3X.

Hosted solution? It cost more per year than the Allworx solution.

By that I mean, not only was the installed cost of the hosted solution higher than Allworx, but you could afford re-purchase the entire Allworx solution every single year, for what the hosted solution cost. Now granted, the hosted solution could tolerate a data center failure, since it’s serviced by separate regional datacenters. That was its primary, albeit expensive edge.

How reliable is the Allworx solution?

Availability can be measured in terms of nines of reliability. For example, the platinum standard is five-nines… 99.999%. That’s a cumulative total outage of 5.62 minutes per year. To put that in perspective, last year Google failed to achieve five-nine’s of availability in search. Surprised? Amazon and Netflix fared far worse . In fact, even the public switched phone network fails to reliability achieve five-nines in a given year. There are a lot of reasons why five-nine’s is hard to achieve, but to give you some perspective… the difference between four-nines and five-nines is 47 minutes per year. The cost of targeting five-nines tends to grow exponentially as you approach it. On the other side of the fence, phone system manufacturers love to tout five-nines. And it’s not just a bullet point on their marketing material, because targeting five-nine’s adds complexity, hardware, licensing, and cost. So while you may desire 99.999% availability, something to keep in mind is that even if your system can conceivably this level of availability, make sure you have an obvious business case for it. As in, unless your business lives and dies by the phone (e.g. a busy call center), you might be overbuying. In my experience, my clients that have Allworx systems are generally seeing around 99.9% availability in a given year.

If you require anything resembling five-nines, you shouldn’t buy the Allworx 48X. It’s just not designed with high-availability in mind. Allworx makes no pretense about it… there’s simply no attempt at competing in high-availability space. Which means Allworx can spend their time and resources improving things that the target market values … bug-fixes, usability, and day-to-day feature requests. Perhaps that’s why you can afford to buy a cold-spare Allworx 48X, along with everything else and still have a more competitive offering than the competition does, even before the competition adds high availability capabilities.

Wright’s masterpiece, Fallingwater was completed in a timespan of three years, and overlaps two other of his great works from the late 1930s. The original sketch for Fallingwater was actually drawn up while Kaufmann was on his way to meet with Wright and review the “completed” sketches of Fallingwater. In defense of Wright’s genius (or arrogance), he said that Fallingwater was fully formed in his mind and that he was completely prepared for the meeting before drawing a single line. Perhaps. In comparison, the Allworx platform is the byproduct of more than fifteen years engineering, funded by investors focused on a building an innovative, low-cost, high-value unified communications platform that is essentially turn-key. At the end of the day, Allworx provides you with a mainstream unified communications solution, at a price point that the competition can’t touch.

The above article is a continuation of Part I. Some of the other platforms that were evaluated included Avaya’s IP Office, ShoreTel, Digium’s Switchvox, Trixbox, FreePBX, and Allworx. If you’re interested more of the detail surrounding the vendor selection process, just sign-up for the newsletter here, and you’ll be able to download this article along with my deliverable (which includes some additional commentary). I use the newsletter to send the occasional update, often with content that’s exclusive to the newsletter (e.g. the client-facing deliverable). No spam ever. Unsubscribe at any time.